GrappleSim

GrappleSim / BJJRL is my attempt to answer a big question:

Can an AI actually learn Brazilian Jiu Jitsu? Not just mimic basic movements, but start to develop strategies, chain attacks, and deal with the chaos and subtlety that make BJJ so addictive in real life.

Why tackle this?

Because it’s hard. And if I can even partially pull it off, it’ll be insanely cool.

I’ve been training Brazilian Jiu Jitsu for almost two years. What’s fascinating about BJJ is just how deep and complex it is. There’s the surface layer of techniques—guards, sweeps, pins—but once you dive in, you realize how much of it is about timing, pressure, leverage, and a kind of spatial awareness that you can’t really pick up just from watching. To really “get” a move, you have to feel it, drill it, see it from every angle, and adapt on the fly.

This got me thinking: Could AI ever learn Jiu Jitsu? Not just “move an arm here, leg there,” but actually roll: anticipate, escape, counter, invent. If we could do that, it wouldn’t just be fun to watch; it could help break down techniques, invent new ones, or even power a next-gen BJJ coach that analyzes your rolling footage.

But here’s the challenge:

- Video-based pose estimation tools like MediaPipe are decent, but they break down fast when bodies are tangled (which is literally all the time in BJJ).

- There’s no public, labeled BJJ pose dataset—collecting one would be a massive project.

- Building a full physical simulator from scratch would be a lifetime project on its own.

That’s when I stumbled across GrappleMap by @eelis: a one-man project mapping out hundreds of positions, transitions, and submissions in BJJ, complete with a data format for storing joint positions and graphing how you move from one to another. Insanely impressive work. GrappleMap gives me a foundation—a map of the BJJ universe—to build on top of.

Working with the Dataset:

Parsing GrappleMap’s data was its own adventure. Each position is stored as a set of joint coordinates in a compressed format.

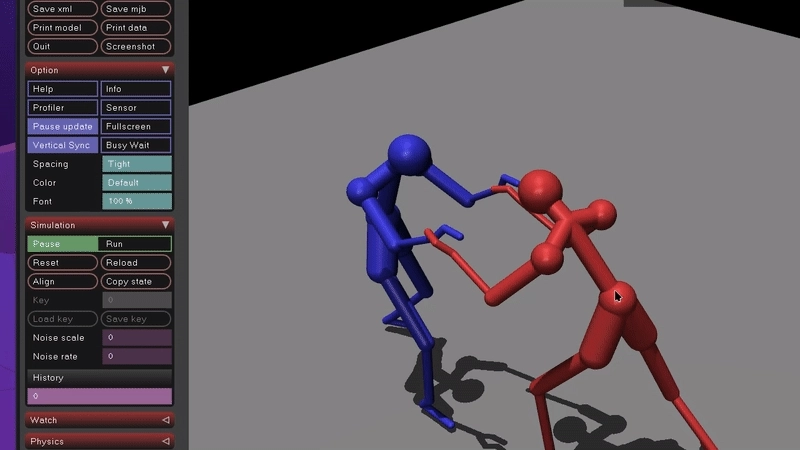
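To give a feel for the decoding step, here is a minimal Python sketch that unpacks a compact text encoding into (x, y, z) joint tuples. The alphabet, the two characters per coordinate, and the `SCALE`/`OFFSET` constants are all assumptions for illustration, not GrappleMap’s documented format; they would need to be checked against GrappleMap’s own source before relying on this.

```python
import string

# ASSUMED encoding, for illustration only (not GrappleMap's documented format):
ALPHABET = string.ascii_lowercase + string.ascii_uppercase + string.digits  # 62 symbols
CHARS_PER_COORD = 2  # each coordinate packed into two base-62 characters
SCALE = 1000.0       # decoded integer treated as millimetres
OFFSET = 2.0         # subtracted so negative coordinates are representable

Joint = tuple[float, float, float]


def decode_coord(chunk: str) -> float:
    """Read a base-62 number from `chunk` and map it to metres."""
    value = 0
    for ch in chunk:
        value = value * len(ALPHABET) + ALPHABET.index(ch)
    return value / SCALE - OFFSET


def decode_position(encoded: str) -> list[Joint]:
    """Split an encoded position string into (x, y, z) joint tuples."""
    encoded = "".join(encoded.split())  # ignore whitespace and line breaks
    step = 3 * CHARS_PER_COORD          # characters per joint
    if len(encoded) % step:
        raise ValueError("length is not a whole number of joints")
    joints = []
    for i in range(0, len(encoded), step):
        x, y, z = (
            decode_coord(encoded[i + k * CHARS_PER_COORD : i + (k + 1) * CHARS_PER_COORD])
            for k in range(3)
        )
        joints.append((x, y, z))
    return joints
```

Under these assumptions two characters give 62² = 3844 levels per axis, i.e. millimetre resolution over roughly a 3.8 m range, which is plenty for two people on a mat.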

I wrote scripts to decode these into 3D joint positions, then imported them into MuJoCo for visualization and further modeling. Getting everything into MuJoCo took some trial and error: I needed to create a separate T-pose position to get the joint limits configured correctly. The next step is using inverse kinematics to convert the joint positions into joint angles, so the bodies can be posed and moved on the fly.
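A minimal sketch of the inverse kinematics idea, using damped least squares on a toy planar two-link arm (think shoulder and elbow reaching for a wrist target). The link lengths, damping, and starting angles are hypothetical. In the MuJoCo model, the hand-written Jacobian would be replaced by the simulator’s own (for example via `mujoco.mj_jacSite`), and each decoded GrappleMap joint position would become a target.

```python
import math


def forward(l1, l2, q):
    """Wrist position of a planar 2-link chain with joint angles q = (shoulder, elbow)."""
    a, b = q
    x = l1 * math.cos(a) + l2 * math.cos(a + b)
    y = l1 * math.sin(a) + l2 * math.sin(a + b)
    return x, y


def jacobian(l1, l2, q):
    """d(wrist)/d(q) for the chain above, as a 2x2 nested list."""
    a, b = q
    return [
        [-l1 * math.sin(a) - l2 * math.sin(a + b), -l2 * math.sin(a + b)],
        [l1 * math.cos(a) + l2 * math.cos(a + b), l2 * math.cos(a + b)],
    ]


def ik_dls(target, l1=0.3, l2=0.25, q0=(0.3, 1.0), damping=0.05, iters=200, tol=1e-6):
    """Damped least squares: dq = J^T (J J^T + damping^2 I)^-1 e, repeated until e is small."""
    q = list(q0)
    for _ in range(iters):
        x, y = forward(l1, l2, q)
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) < tol:
            break
        J = jacobian(l1, l2, q)
        # A = J J^T + damping^2 I (symmetric 2x2, always invertible)
        a11 = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
        a12 = J[0][0] * J[1][0] + J[0][1] * J[1][1]
        a22 = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
        det = a11 * a22 - a12 * a12
        # Solve A w = e with the closed-form 2x2 inverse
        wx = (a22 * ex - a12 * ey) / det
        wy = (-a12 * ex + a11 * ey) / det
        # dq = J^T w
        q[0] += J[0][0] * wx + J[1][0] * wy
        q[1] += J[0][1] * wx + J[1][1] * wy
    return q
```

The damping term keeps steps bounded near singular configurations (like a fully straightened arm, which comes up a lot in armbars), at the cost of slightly slower convergence.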

The dataset also contains transitions, stored as sequences of joint position keyframes, which could be used as reference motions to “teach” the AI moves, so it doesn’t have to discover everything from scratch.
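Turning keyframes into a reference motion can be as simple as interpolating between them. This sketch assumes the keyframes are evenly spaced over a hypothetical `duration` (the timing is an assumption) and uses plain linear interpolation of joint positions; for joint angles stored as rotations, spherical interpolation would be more appropriate.

```python
Joint = tuple[float, float, float]
Pose = list[Joint]


def lerp_pose(a: Pose, b: Pose, u: float) -> Pose:
    """Linearly blend two poses joint by joint (u=0 gives a, u=1 gives b)."""
    return [
        tuple(pa + u * (pb - pa) for pa, pb in zip(ja, jb))
        for ja, jb in zip(a, b)
    ]


def reference_pose(keyframes: list[Pose], duration: float, t: float) -> Pose:
    """Reference pose at time t, assuming keyframes are evenly spaced over `duration`."""
    if len(keyframes) == 1:
        return keyframes[0]
    # Map t to a fractional keyframe index, clamped to the clip's start and end
    s = min(max(t / duration, 0.0), 1.0) * (len(keyframes) - 1)
    i = min(int(s), len(keyframes) - 2)  # segment index
    return lerp_pose(keyframes[i], keyframes[i + 1], s - i)
```

Sampling `reference_pose` at each simulation step gives the target the agent is rewarded for tracking.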

I’m also pretty new to RL in general, but I’m hoping to learn enough to implement something like DeepMimic, which uses RL to train a physics-based character to imitate reference motion clips, and use it to teach the AI basic moves. I’m still figuring out how to design the reward: maybe something modeled on the BJJ competition points system, or detecting submissions from joint angles and torques. There’s a lot of complexity here.
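One way those reward ideas could fit together, sketched under loose assumptions: an imitation term in the exponentiated-error form DeepMimic uses for pose tracking (simplified here to scalar hinge angles, with a hypothetical default scale `k`), IBJJF-style point values for positional events (takedown, sweep, and knee on belly 2; guard pass 3; mount and back control 4), and a crude submission check that fires when a joint is pushed past an angle limit under load. The weights, limits, and event names are all hypothetical.

```python
import math

# IBJJF-style positional points; the event names are hypothetical labels.
POINTS = {
    "takedown": 2,
    "sweep": 2,
    "knee_on_belly": 2,
    "guard_pass": 3,
    "mount": 4,
    "back_control": 4,
}


def imitation_reward(ref_angles, sim_angles, k=2.0):
    """exp(-k * squared tracking error): 1.0 for a perfect match, decaying toward 0."""
    err = sum((r - s) ** 2 for r, s in zip(ref_angles, sim_angles))
    return math.exp(-k * err)


def submission_signal(joint_angle, joint_torque, angle_limit, torque_limit):
    """True if a joint is forced past an (assumed) angle limit under (assumed) load."""
    return joint_angle > angle_limit and abs(joint_torque) > torque_limit


def step_reward(ref_angles, sim_angles, events, submitted,
                w_imitate=0.5, w_points=0.1, submission_bonus=10.0):
    """Hypothetical blend of imitation, points, and submission terms for one step."""
    reward = w_imitate * imitation_reward(ref_angles, sim_angles)
    reward += w_points * sum(POINTS[e] for e in events)
    if submitted:
        reward += submission_bonus
    return reward
```

The relative weights matter a lot here: too much imitation reward and the agent just replays clips, too little and it never learns the basic mechanics.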

So here’s the play:

- Short term: Build an RL environment where agents can try to escape pins, sweep, or even “roll” against each other in simplified scenarios. Can an AI discover an armbar on its own? Can it invent a new sweep? I want to find out.

- Bigger picture: If this works, it opens up two wild possibilities. First, letting AIs actually play BJJ could help us understand the game better or even create new moves. Second, a good simulation could be used to generate synthetic pose data—maybe, finally, making a BJJ “roll analyzer” for real-life footage possible.
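The synthetic-data idea boils down to rendering known 3D joints into 2D keypoint labels. A minimal pinhole-camera sketch, assuming joints in metres, a hypothetical camera pose (`R`, `t`) and intrinsics (`fx`, `fy`, `cx`, `cy`), and the common computer-vision convention of +z pointing forward out of the camera:

```python
Vec3 = tuple[float, float, float]


def world_to_camera(p: Vec3, R, t: Vec3) -> Vec3:
    """p_cam = R @ p_world + t, with R a 3x3 rotation as nested lists/tuples."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project_joints(joints_world, R, t, fx, fy, cx, cy, width, height):
    """Pinhole projection of 3D joints to (u, v, in_frame) pixel labels.

    Joints at or behind the camera plane get None instead of a keypoint.
    """
    keypoints = []
    for p in joints_world:
        x, y, z = world_to_camera(p, R, t)
        if z <= 1e-6:
            keypoints.append(None)
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        keypoints.append((u, v, 0 <= u < width and 0 <= v < height))
    return keypoints
```

Since the simulator knows where every joint is, even one buried under the other grappler, labels like these would cover exactly the tangled cases that video-only tools struggle with.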

The project’s still in early days, but if you’re curious (or want to contribute), the code is on GitHub.

If you’re into AI, BJJ, or just hacking on hard problems, reach out—I’d love to jam on this with other curious people.
